Converting JSON-LD schema.org RDF to other vocabularies

So that we can use tools designed around those vocabularies.

Last month I wrote about how we can treat the growing amount of JSON-LD in the world as RDF. By “treat” I mean “query it with SPARQL and use it with the wide choice of RDF application development tools out there”. While I did demonstrate that JSON-LD does just fine with URIs from outside of the schema.org vocabulary, the vast majority of JSON-LD out there uses schema.org.

Some people fret about the “one schema to rule them all” approach. I don’t worry so much because one of the great things about RDF is that once you’ve got data in any standardized RDF syntax, you can convert it to use whatever namespaces you want. Today we’ll see how I did this so that I could load JSON-LD schema.org metadata from my blog into a SKOS visualization tool.

I also mentioned last month that the Hugo platform that I recently started using for my blog, in its default configuration, automatically generates JSON-LD metadata about my blog entries. The old Movable Type platform that I formerly used let me assign categories and tags to the entries, so when I migrated the old entries I brought those along.

Here is an excerpt of some metadata from one of my blog entries after I converted it from JSON-LD to Turtle:

    [ a schema:BlogPosting ;
      schema:author "Bob DuCharme" ;
      schema:datePublished "2019-02-24 10:45:30 -0500 EST"^^schema:Date ;
      schema:description "A quick reference." ;
      schema:headline "curling SPARQL" ;
      schema:inLanguage "en" ;
      schema:keywords "SPARQL" , "curl" , "Blog" ;
      schema:name "curling SPARQL" ;
      schema:url <http://www.bobdc.com/blog/curling-sparql/>
    ] .

I wanted to convert that to RDF that met three conditions:

- Instead of using a blank node as the subject, use the schema:url value included with the data.

- Define SKOS concepts for each schema:keywords value that the metadata uses.

- Use Dublin Core properties to connect as much of the metadata as possible, including the SKOS concepts, to the posting.

This SPARQL query made this all quite straightforward:

    # convertTriples.rq
    PREFIX schema: <http://schema.org/>
    PREFIX dc: <http://purl.org/dc/elements/1.1/>
    PREFIX skos: <http://www.w3.org/2004/02/skos/core#>

    CONSTRUCT {
      ?url dc:title ?name ;
           dc:creator ?author ;
           dc:description ?description ;
           dc:subject ?kwURI .
      ?kwURI a skos:Concept ;
             skos:prefLabel ?keyword .
    }
    WHERE {
      ?entry schema:url ?url ;
             schema:name ?name ;
             schema:author ?author ;
             schema:description ?description .
      OPTIONAL {
        ?entry schema:keywords ?keyword .
        BIND(URI(concat("http://www.bobdc.com/tags/",?keyword))
             AS ?kwURI)
      }
    }

The WHERE clause, as always, grabs the needed values. Instead of assuming that every blog entry has keywords assigned, I put the part that handles those inside of an OPTIONAL clause.

That part also creates a URI from each schema:keywords value to be the identity for the SKOS concept built from that keyword. To do that, I originally concatenated the values onto the base URI http://bobdc.com/blog/kwords/ that I just made up, but when I noticed that Hugo creates pages for each keyword at http://www.bobdc.com/tags/ I realized something nice: instead of a base URI that I made up from scratch, using the Hugo-generated one would give me dereferenceable URIs for the SKOS concepts. (For example, my earlier blog entries about DBpedia are assigned the keyword “dbpedia”, which becomes the URI http://www.bobdc.com/tags/dbpedia of a concept about DBpedia, and you can click that URI to see a list of those blog entries.) So I used that as the base URI when creating the URI for each new SKOS concept.
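The concat() call in the query appends each keyword to the base URI exactly as written, which is fine for one-word keywords like "dbpedia". A keyword containing a space or a slash would need escaping to produce a legal URI. Here is a minimal Python sketch of that minting step, assuming percent-encoding is the escaping you want; the function name keyword_to_uri is made up for illustration, and nothing here checks that the result matches the page Hugo actually generates for a given tag. (Inside a query, SPARQL 1.1's ENCODE_FOR_URI function plays the same role.)

```python
from urllib.parse import quote

# Base URI of the Hugo-generated tag pages mentioned above.
TAG_BASE = "http://www.bobdc.com/tags/"


def keyword_to_uri(keyword: str, base: str = TAG_BASE) -> str:
    """Mint a concept URI by appending a percent-encoded keyword to the base.

    Hypothetical helper for illustration; not part of the original workflow.
    """
    # quote() leaves letters, digits, and "_.-~" alone and escapes the rest,
    # so "linked data" becomes "linked%20data". safe="" also escapes "/",
    # which would otherwise introduce an extra path segment.
    return base + quote(keyword.strip(), safe="")


if __name__ == "__main__":
    for kw in ["dbpedia", "linked data", "C/C++"]:
        print(kw, "->", keyword_to_uri(kw))
```

For a plain keyword such as "dbpedia" the output is the same URI that the query builds, so the escaping only matters for the awkward cases.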

The CONSTRUCT clause follows through on the tasks listed in my bulleted list above.

SKOS is typically used to arrange topics into hierarchies, so that if for example your SKOS vocabulary says that “collie” has a broader value of “dog” and you’re looking for articles about dogs, you can retrieve all the ones tagged with “dog” or with any of the values in the SKOS subtree below “dog” such as “collie”. After running the query above I had a list of concepts with no hierarchy, so I created one. Of course there are GUI tools that let you click and drag to turn such a list into a hierarchy; the use of these tools is one of the reasons for converting the schema.org keyword metadata into SKOS metadata. Instead of using one of these tools, though, I found it simpler to just type out a text file with lines like this,

    XSLT broader XML
    XBRL broader XML
    mysql broader SQL
    audio broader music
    bass broader music
    D2RQ broader RDF
    RDFa broader RDF

and then, after typing in some namespace declarations at the very top, doing a few global replacements to turn it into this:

    bt:XSLT skos:broader bt:XML .
    bt:XBRL skos:broader bt:XML .
    bt:mysql skos:broader bt:SQL .
    bt:audio skos:broader bt:music .
    bt:bass skos:broader bt:music .
    bt:D2RQ skos:broader bt:RDF .
    bt:RDFa skos:broader bt:RDF .
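If you would rather script those global replacements than do them in an editor, a few lines of Python can do the same job. This is only a sketch: the namespace declarations are not shown in this post, so the URI bound to the bt: prefix below is an assumption (the tag base URI discussed earlier), and the function name is invented for the example.

```python
# Namespace bound to the bt: prefix. ASSUMPTION: the post does not show its
# namespace declarations, so the tag base URI is used here as a guess.
BT_NS = "http://www.bobdc.com/tags/"
SKOS_NS = "http://www.w3.org/2004/02/skos/core#"


def broader_lines_to_turtle(text: str) -> str:
    """Turn lines like 'XSLT broader XML' into Turtle skos:broader triples."""
    out = [
        f"@prefix bt: <{BT_NS}> .",
        f"@prefix skos: <{SKOS_NS}> .",
        "",
    ]
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        if len(parts) != 3 or parts[1] != "broader":
            raise ValueError(f"unexpected line: {line!r}")
        child, _, parent = parts
        # Prefixed names only work when the keyword is a legal Turtle local
        # name (no spaces, for example); this sketch does not check that.
        out.append(f"bt:{child} skos:broader bt:{parent} .")
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    print(broader_lines_to_turtle("XSLT broader XML\nmysql broader SQL\n"))
```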

Useful data modeling can sometimes be simple.
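To make the payoff of those skos:broader links concrete, here is a small Python sketch of the retrieval idea described earlier: ask for a concept and get it plus everything beneath it in the hierarchy. It assumes the links are held as plain (child, parent) pairs of keyword strings, and narrower_closure is a name invented for this example. In a SPARQL query, a property path such as skos:broader* does the equivalent traversal.

```python
from collections import defaultdict


def narrower_closure(broader_pairs, concept):
    """Return the concept plus everything below it in the broader hierarchy.

    Hypothetical helper; broader_pairs is an iterable of (child, parent).
    """
    children = defaultdict(set)
    for child, parent in broader_pairs:
        children[parent].add(child)

    found = {concept}
    stack = [concept]
    while stack:
        current = stack.pop()
        for child in children.get(current, ()):
            if child not in found:  # guards against cycles in the data
                found.add(child)
                stack.append(child)
    return found


# The pairs typed out above, as (child, parent) tuples.
PAIRS = [
    ("XSLT", "XML"), ("XBRL", "XML"), ("mysql", "SQL"),
    ("audio", "music"), ("bass", "music"), ("D2RQ", "RDF"), ("RDFa", "RDF"),
]

if __name__ == "__main__":
    print(sorted(narrower_closure(PAIRS, "XML")))
```

A search for entries tagged with anything in the returned set is the "dog also finds collie" behavior.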

I added these triples to the result of the CONSTRUCT query above and loaded the resulting SKOS into the wonderful SKOS Play! site’s visualizer. (Pun intended?) My not-very-controlled-vocabulary had a lot of orphan elements, so to make a nicer visualization I used the following query to pull, from the result of the earlier CONSTRUCT query, only SKOS concepts taking part in some hierarchy:

    # getChildrenAndParents.rq
    PREFIX skos: <http://www.w3.org/2004/02/skos/core#>

    CONSTRUCT {
      ?child ?childP ?childO .
      ?parent ?parentP ?parentO .
    }
    WHERE {
      ?child skos:broader ?parent .
      ?child ?childP ?childO .
      ?parent ?parentP ?parentO .
    }

Nothing OPTIONAL in that query!
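Because nothing is OPTIONAL, all three triple patterns must match before anything is output. The following Python sketch imitates that behavior in memory to show the consequences. It is not how arq evaluates the query; it assumes triples are plain (subject, predicate, object) tuples of prefixed-name strings, and the function name is invented.

```python
BROADER = "skos:broader"


def children_and_parents(triples):
    """Imitate getChildrenAndParents.rq over a set of (s, p, o) tuples."""
    by_subject = {}
    for s, p, o in triples:
        by_subject.setdefault(s, set()).add((s, p, o))

    result = set()
    for child, p, parent in triples:
        if p != BROADER:
            continue
        # Every pattern in the WHERE clause is required, so a parent that is
        # never the subject of any triple produces no solution at all, and
        # the child's triples are not copied for that pair either.
        if parent in by_subject:
            result |= by_subject[child]
            result |= by_subject[parent]
    return result


if __name__ == "__main__":
    data = {
        ("bt:XSLT", "skos:broader", "bt:XML"),
        ("bt:XSLT", "skos:prefLabel", "XSLT"),
        ("bt:XML", "skos:prefLabel", "XML"),
        ("bt:curl", "skos:prefLabel", "curl"),  # orphan: no broader link
    }
    for triple in sorted(children_and_parents(data)):
        print(triple)
```

The orphan concept drops out, which is the point of the query. The sketch also shows a side effect: a parent with no triples of its own would take its children out of the result with it.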

With the results of the first convertTriples.rq CONSTRUCT query in a file called convertedTriples.ttl and the additional skos:broader triples in the additionalModeling.ttl file, I had the Jena arq command line tool run this new query on the combined data to create something to load into SKOS Play:

arq --query getChildrenAndParents.rq --data convertedTriples.ttl --data additionalModeling.ttl > conceptTrees.ttl

arq’s ability to accept multiple --data arguments (potentially each using different RDF syntaxes!) can be very handy sometimes.

On the SKOS Play site's Play page, I used the local file option to upload the conceptTrees.ttl file created by the arq command line shown above. (The page includes some options that look fun to play with: Infer on subclasses and subproperties, Handle SKOS-XL properties, and Transform an OWL ontology to SKOS.)

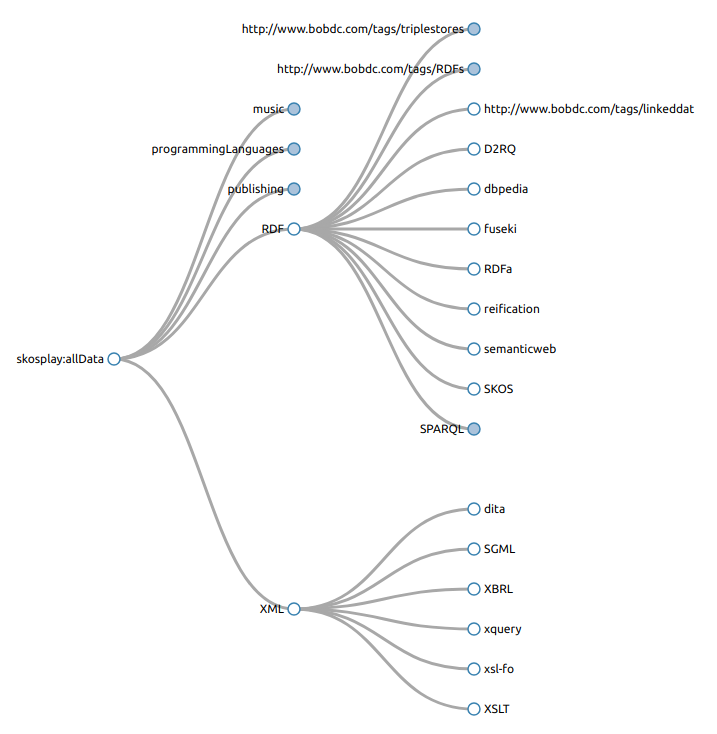

When I clicked the page’s orange Next button, the site parsed my uploaded file, told me how many concepts it found, and offered some options for how to display it. I went with the default Visualize option of Tree Visualization, which displayed the skosplay:allData node you see on the left below and the first row of nodes to the right of that. Clicking blue nodes displays their child nodes, and you can see the result after I clicked the RDF and XML ones.

SKOS concepts use URIs as their identity and skos:prefLabel values to show human-readable values in as many languages as you like. You can see that the SKOS Play diagram uses skos:prefLabel values when available, and the full URLs at the top of my diagram show that a few concepts still need skos:prefLabel values. (The convertTriples.rq query created them for most concepts.) It’s a nice example of how such tools can help us identify ways to improve our data, but of course a query for concepts that lack skos:prefLabel values would be easy enough.
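As a rough illustration of that last check, here is a Python sketch that lists concepts with no skos:prefLabel. It assumes the triples are available as (subject, predicate, object) tuples of prefixed-name strings, and concepts_missing_labels is a name made up for the example; with the data loaded in a triplestore, a SPARQL query would be the more natural route.

```python
def concepts_missing_labels(triples):
    """Return, sorted, the concepts that have no skos:prefLabel triple.

    Hypothetical helper working on (s, p, o) tuples of prefixed names.
    """
    concepts = set()
    labeled = set()
    for s, p, o in triples:
        if p == "skos:broader":
            # Both ends of a skos:broader link are SKOS concepts.
            concepts.update((s, o))
        elif p == "rdf:type" and o == "skos:Concept":
            concepts.add(s)
        elif p == "skos:prefLabel":
            labeled.add(s)
    return sorted(concepts - labeled)


if __name__ == "__main__":
    data = [
        ("bt:D2RQ", "rdf:type", "skos:Concept"),
        ("bt:D2RQ", "skos:prefLabel", "D2RQ"),
        ("bt:D2RQ", "skos:broader", "bt:RDF"),  # bt:RDF never gets a label
    ]
    print(concepts_missing_labels(data))
```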

I didn’t even do anything with the triples that I converted to use the Dublin Core vocabulary, but because Dublin Core is a long-standing, popular standard, plenty of tools out there can work with it. They can help to make an even better case that if schema.org JSON-LD triples don’t conform to the vocabulary that you want to use, just convert them!
